It took Netflix three and a half years to reach 1 million users after introducing its groundbreaking, web-driven DVDs-by-mail subscription service in 1999. That was quite an accomplishment, given that people buying into new technologies at that time were considered a niche audience of early adopters unafraid to live on the cutting edge.

In the 2000s, it took Airbnb two and a half years to attract a million users, Facebook 10 months and music streaming service Spotify just five months to reach that audience size, a sign of increasing consumer comfort with innovative tech services that could add value to their daily lives. When Instagram attracted a million users after less than three months in 2010, it was a big deal, with industry watchers calling out the “insane growth” of the photo-sharing app.

If hitting a million users is a key milestone for turning an untested tech service into a mainstream destination, then think about this: OpenAI’s ChatGPT, the generative AI chatbot that debuted on Nov. 30, 2022, reached 1 million users in five days.

Five days.

That’s mind-blowing.

Then think about this: ChatGPT drew 100 million users in just two months.

It speaks to the attention we’re all giving to a new generation of chatbots able to have human-like conversations. A year after its launch, ChatGPT has over 150 million unique users (who have to set up an account to use the site) and hosted nearly 1.7 billion visits in November, making it one of the world’s top online destinations, according to Similarweb. The research firm tracks the adoption of today’s most popular generative AI chatbots, including ChatGPT, Google Bard, Microsoft Bing, Character.ai and Claude.ai.

What’s driving all that interest? The potential new use cases chatbots promise, despite privacy and security concerns about how they work and how they might be weaponized by bad actors. While AI has been part of our tech for decades — a large percentage of your Netflix and Amazon recommendations are based on an AI algorithm, for instance — gen AI is something else.

These chatbots are based on a large language model, or LLM, a type of AI neural network that uses deep learning (a technique loosely inspired by the way the human brain works) to process an enormous set of data and perform a variety of natural language processing tasks.

What does that mean? They can understand, summarize, predict and generate new content in a way that’s easily accessible to everyone. Instead of needing to know programming code to speak to a gen AI chatbot, you can ask questions (known as “prompts” in AI lingo) using plain English. Version 3.5 of OpenAI’s GPT LLM, for instance, is trained on 300 billion words. Depending on what data it’s been fed, a chatbot can generate text, images, video and audio; do math calculations; analyze data and chart the results; and even write programming code for you, often delivering results in seconds.

When it arrived in late 2022, ChatGPT made AI instantly and easily available for everyday people to access and try out. The software from OpenAI rocketed to 1 million users in just five days.

“Generative AI has been the subject of intense consumer excitement, especially with ChatGPT, because it really has brought a lot of tangibility to consumers,” says Brian Comiskey, a program director for the Consumer Technology Association. That’s why AI will be one of the big themes at the CTA’s annual Consumer Electronics Show in Las Vegas starting Jan. 9. “Consumers can see AI working for them in a lot of ways: I put in an input and I get a response back. I can test it out.”

As 2024 unfolds, you might test a chatbot by prompting it to do things that may have seemed impossible before or would have taken a lot of your time, energy and resources, like writing a short story about fishing in the style of Ernest Hemingway or summarizing a book or scientific study. You could plan a Taylor Swift-themed dance party, like CNET’s Abrar Al-Heeti did, or create a metaverse for a new game, plan a travel itinerary to Machu Picchu, have David Attenborough narrate your life, plan a meal with enough variety to satisfy meat eaters, vegetarians, vegans and the gluten-free or become a fashion designer and create a corduroy-inspired collection. You can even have a theoretical conversation with Jesus or Jane Austen.

The ability to have that back-and-forth with a human-sounding assistant is the big deal here, says Andrew McAfee, a principal research scientist at the MIT Sloan School of Management. “For the first time ever, we have created a technology that understands human language.”

While today’s chatbots aren’t really “artificial intelligences” because they’re not thinking, sentient entities that truly know and understand the world as humans do, a generative AI chatbot “can look at a stream of words and figure out what the person is trying to say, and respond to that prompt or that request,” says McAfee. “It’s a pretty remarkable feat.”

That’s why you should get up to speed on these chatbots — what they are, how they work and the opportunities and challenges they pose to humanity. These tools are literally changing the conversation, pun intended, around the future of work and education and how we may soon go about day-to-day tasks. So consider this an introduction to generative AI, including some practical tips about how you can start experimenting with some of the most popular tools today.

Don’t just take my word for it. I asked ChatGPT why we humans should know something about generative AI and tools like ChatGPT. Here’s what it said: “Knowing about generative AI and tools like ChatGPT empowers you to leverage the latest advancements, explore creative possibilities, enhance productivity, improve customer experiences, and contribute to the ethical and responsible use of AI.”

Old jobs, new jobs, more jobs?

The productivity and profit boost that automated tech is expected to deliver is already leading businesses to think about what they’ll expect from their human employees, as soon as this year.

MIT’s Sloan School of Management partnered with the Boston Consulting Group and found that generative AI can improve performance by as much as 40% for highly skilled workers compared with those who don’t use it. Software engineers can code up to twice as fast using gen AI tools, according to studies cited by the Brookings Institution.

LinkedIn surveyed CIOs, CEOs, data scientists, software engineers and other heavy data users and asked them to use generative AI to see how much time they saved on tasks such as drafting emails, analyzing text and creating documents. They reported that tasks that would take them 10 hours to do manually could take five to six hours less with generative AI. That translates into spending 50% to 60% less time on some routine tasks, so you can instead devote attention to more rewarding or higher-value work.

Most Americans (82%) haven’t even tried ChatGPT and over half say they’re more concerned than excited by the increased use of AI in their daily life, according to the Pew Research Center. Researchers there have started identifying jobs that may be affected in some way by generative AI. They include budget analysts, tax preparers, data entry keyers, law clerks, technical writers and web developers. Think roles whose tasks include “getting information” and “analyzing data or information,” Pew said.

Before you start worrying that AI will eat all the jobs, Goldman Sachs cautions that such concerns may be overblown, since new tech has historically ushered in new kinds of jobs. In a widely cited March 2023 report, the firm noted that 60% of today’s workers are employed in occupations that didn’t exist in 1940.

Even so, the firm predicts the labor market could face “significant disruption.” After reviewing 900 job roles, Goldman Sachs’ economists estimated that about two-thirds of US occupations are already exposed to some degree of automation and that “generative AI could substitute up to one-fourth of current work.”

“Despite significant uncertainty around the potential of generative AI, its ability to generate content that is indistinguishable from human-created output and to break down communication barriers between humans and machines reflects a major advancement with potentially large macroeconomic effects,” Goldman Sachs’ economists concluded.

Putting aside the very real debate about whether gen AI-produced content is truly “indistinguishable” from human-created output (this story was completely written by a human, by the way), the point is this: What should today’s — and tomorrow’s — workers do?

The experts agree: Get comfortable with AI chatbots if you want to remain attractive to employers.

Instead of focusing on generative AI as a potential job killer, lean into the idea that chatbots can serve as your assistant or copilot, helping you do whatever it is you do better, faster, more effectively or in entirely new ways, thanks to having a mostly reliable supercomputer you can converse and collaborate with. (“Mostly reliable” refers to chatbots’ hallucination problem. Simply put, AI engines have a tendency to make up stuff that isn’t true but sounds like it’s true. More on that later.)

Calling all prompt engineers

The tech has already created a new kind of job called “prompt engineering.” Prompt engineers know how to effectively “talk” to chatbots, asking questions in a way that gets a satisfying result. They don’t necessarily need to be technical engineers, but rather people with problem-solving, critical thinking and communication skills. Job listings for prompt engineers showed salaries of $300,000 or more in 2023.

Ryan Bulkoski, head of the AI, data and analytics practice at executive recruitment firm Heidrick & Struggles, says upskilling employees and having leaders be better informed about AI are “critical” today because it will take time to build an “AI-educated workforce.”

“If a company says, ‘Oh, I want someone who has five years of experience as an AI prompt engineer,’ guess what? It’s not in existence. That role only came up in the last 18 months,” he says.

That’s why becoming comfortable with chatbots should be on your 2024 to-do list, especially for knowledge workers who will be the “most exposed to change,” the job site Indeed.com found in a September report. It examined 55 million job postings and more than 2,600 skills to determine which jobs and skills had low, moderate and high exposure to generative AI disruption.

More-experienced workers might want to start that upskilling work sooner rather than later. Researchers at the University of Oxford found that older workers may be at a higher risk from AI-related job threats because they might not be as comfortable adopting new tech as their younger colleagues.

“When the pocket calculator came out, a lot of people thought that their jobs were going to be in danger because they calculated for a living,” MIT’s McAfee says. “It turns out we still need a lot of analysts and engineers and scientists and accountants — people who work with numbers. If they’re not working with a calculator or by now a spreadsheet, they’re really not going to be very employable anymore.”

A few ways you can play with gen AI today

Generative AI’s ability to have a natural language collaboration with humans puts it in a special class of technology — what researchers and economists call a general-purpose technology. That is, something that “can affect an entire economy, usually at a national or global level,” Wikipedia explains. “GPTs have the potential to drastically alter societies through their impact on pre-existing economic and social structures.”

Other such GPTs include electricity, the steam engine and the internet — things that become fundamental to society because they can affect the quality of life for everyone. (That GPT is different, by the way, from the one in ChatGPT, which stands for “generative pre-trained transformer.”)

You can engage with AI chatbots in many ways. Most tools are free, with a step up to a paid subscription plan if you want a more robust version that works faster, offers more security and/or allows you to create more content. There are caveats in using all these tools, especially when it comes to privacy. Google Bard, for instance, collects your conversations, while ChatGPT says it collects “personal information that is included in the input, file uploads, or feedback that you provide to our services.” Read the terms of service or check out privacy assessments from third parties like Common Sense Media.

“You should at least try [these tools] to get some idea beyond the news headline of what they can and can’t do,” said David Carr, a senior insights manager at Similarweb. “This is going to be a big part of how the internet changes and how our whole experience of work and computing changes over the next few years.”

A way with words: OpenAI’s chatbot is at the top of most people’s lists to try. A few months after its debut, actor Ryan Reynolds asked ChatGPT to write a TV commercial for his Mint Mobile wireless service and shared the result on YouTube, where it got nearly 2 million views. His take on the AI-generated ad? “Mildly terrifying but compelling.”

It’s not the only AI copilot able to answer questions, brainstorm ideas with you, summarize articles and meeting notes, translate text into different languages, compose emails and job descriptions, write jokes (apparently not very well) or help you figure out how to do something — like learn a new language.

ChatGPT will write entire essays, poems, business plans and more, but it can also answer your questions about writing, grammar and style.

There’s also Google Bard, Microsoft Bing (which is based on OpenAI’s technology), Anthropic’s Claude.ai, Perplexity.ai and YouChat. In November, people spent between five and eight minutes playing with these tools per visit, according to Similarweb’s Carr. And while ChatGPT leads in visits right now, followed by Bing with 1.3 billion, there were nearly half a billion visits to the other top sites that month.

What does that mean? Generative AI should now be considered a mainstream tech, Carr says. These tools are all “basically doing things that were impossible a couple of years ago.”

Turning words into images: While ChatGPT draws most of the attention, OpenAI first released a text-to-image generator called Dall-E in April 2022. You type in a text prompt, and the tool turns it into visual interpretations of your words, such as “portrait of a blue alien that is singing opera” or a “3D rendering of a bouldering wall made of Swiss cheese.”

Dall-E 3, whose name is a mashup of Pixar’s WALL-E robot and surrealist painter Salvador Dalí, isn’t the only text-to-image generator promising to produce your next masterpiece in seconds. Popular tools in this category include Midjourney, Stable Diffusion, Shutterstock’s AI image generator, Canva Pro, Adobe Firefly, Craiyon, DeviantArt’s Dreamup and Microsoft’s Bing Image Creator, which is based on Dall-E.

Adobe’s Firefly website lets you create words in zany AI-generated font styles like “holographic snakeskin with small shiny scales,” “realistic tiger fur” or “black leather shiny plastic wrinkle.” The company’s free Adobe Express app is suited to flyers, posters, party invitations and quick animations for social media posts, says CNET’s Stephen Shankland. He’s been testing AI image tools and giving hard thought to how they’re causing people to rethink the truth behind photos.

Video and audio: It’s not just words and images getting an AI assist. You’ll find text-to-video converters, including Synthesia, Lumen5 and Meta’s Emu Video, that are being used to reimagine how films, videos, GIFs and animations are created. There are text-to-audio generators, like ElevenLabs, Descript and Speechify, and text-to-music generators including Stable Audio and SongR. Google is testing a tool called Dream Track that lets you create music tracks for YouTube videos by cloning the voices of nine musicians, including John Legend, Demi Lovato and Sia, with their permission.

If you’re looking to experiment, CNET video producer Stephen Beacham created a step-by-step tutorial showing how to use ElevenLabs’ AI voice generator to clone your own voice.

You can probably think of lots of ways that cloning someone’s voice might be used for nefarious purposes. (Hi Grandma, can you send me some money?) President Joe Biden called out worries over AI voice cloning tech, telling reporters after signing an executive order that aims to put guardrails around the use of AI that someone can use a three-second clip of his voice to generate an entire fake conversation. “When the hell did I say that?” Biden joked after watching an AI deepfake of himself.

There are compelling potential use cases. Spotify is testing a voice translation feature that will use AI to translate podcasts into additional languages in the original podcaster’s voice.

New York City Mayor Eric Adams used an audio converter to deliver a public service message to city residents in 10 languages, although he got into trouble for not telling people he’d gotten an AI assist to make it sound as though he were speaking Mandarin.

His disclosure gaffe aside, Adams made a good point about using tech to reach audiences who have “historically been locked out” because translating messages into different languages might not be feasible due to time, resources or cost. Said Adams, “We are becoming more welcoming by utilizing tech to speak in a multitude of languages.”

Product recommendations and purchasing decisions: When you buy something online or on your mobile device, you’ll see that companies are already investing in generative AI to better answer product questions, troubleshoot, recommend new products and guide you through complex purchasing decisions.

Walmart, whose CEO, Doug McMillon, is one of the keynote speakers at CES, said it has spent the past few years adding conversational AI to help its 230 million customers find and reorder products. If you’re in the market for a car, new services like CoPilot for Car Shopping say they can search dealers for you, as well as analyze and compare car specifications to help you pick the right model. Zillow added a natural language search to its site this year to guide buyers and renters as they look for their next home using phrases like “open house near me with four bedrooms” rather than requiring that you select a bunch of filters to narrow down your search.

There are ways you can use general chatbots, like ChatGPT, to help you with your product search, as CNET’s Caroline Igo did as part of a search for a new mattress.

Education: While students’ potential misuse of generative AI conjures up a world of similar-sounding social studies reports on the Constitution, the US Department of Education sees potential in the tech. That includes helping teachers find and adapt materials for their lesson plans and using AI-powered speech recognition to “increase the support available to students with disabilities, multilingual learners, and others who could benefit from greater adaptivity and personalization in digital tools for learning.”

Sal Khan, founder and CEO of Khan Academy, gave a TED talk in April 2023 describing how generative AI can transform education, assuming we put the right guardrails in place to mitigate problems like plagiarism and cheating and to manage worries that students might outsource their assignments to a chatbot.

“We’re at the cusp of using AI for the biggest positive transformation that education has ever seen,” Khan said in a 15-minute presentation, How AI Could Save (Not Destroy) Education, which has over a million views. “The way we’re going to do that is by giving every student on the planet an artificially intelligent but amazing personal tutor. And we’re going to give every teacher on the planet an amazing, artificially intelligent teaching assistant.”

Khan Labs has already built AI-powered tutors for learners and assistants for teachers. Called Khanmigo, the tool is available to Khan Academy members or for $4 a month ($44 a year).

Travel: Planning a flawless vacation itinerary is an art, but it can also be time-consuming. In theory, travel planning is the perfect task to outsource to AI, which can assemble a list of attractions curated to your interests and mapped out in a way that makes sense in terms of geography, pacing and even budget. That’s in theory.

In practice, your results may vary. Before you deploy an AI travel concierge, here are a few suggestions whether you use a general tool like ChatGPT or an AI itinerary generator, such as GuideGeek, Roam Around, Wonderplan, Tripnotes or the Out of Office app.

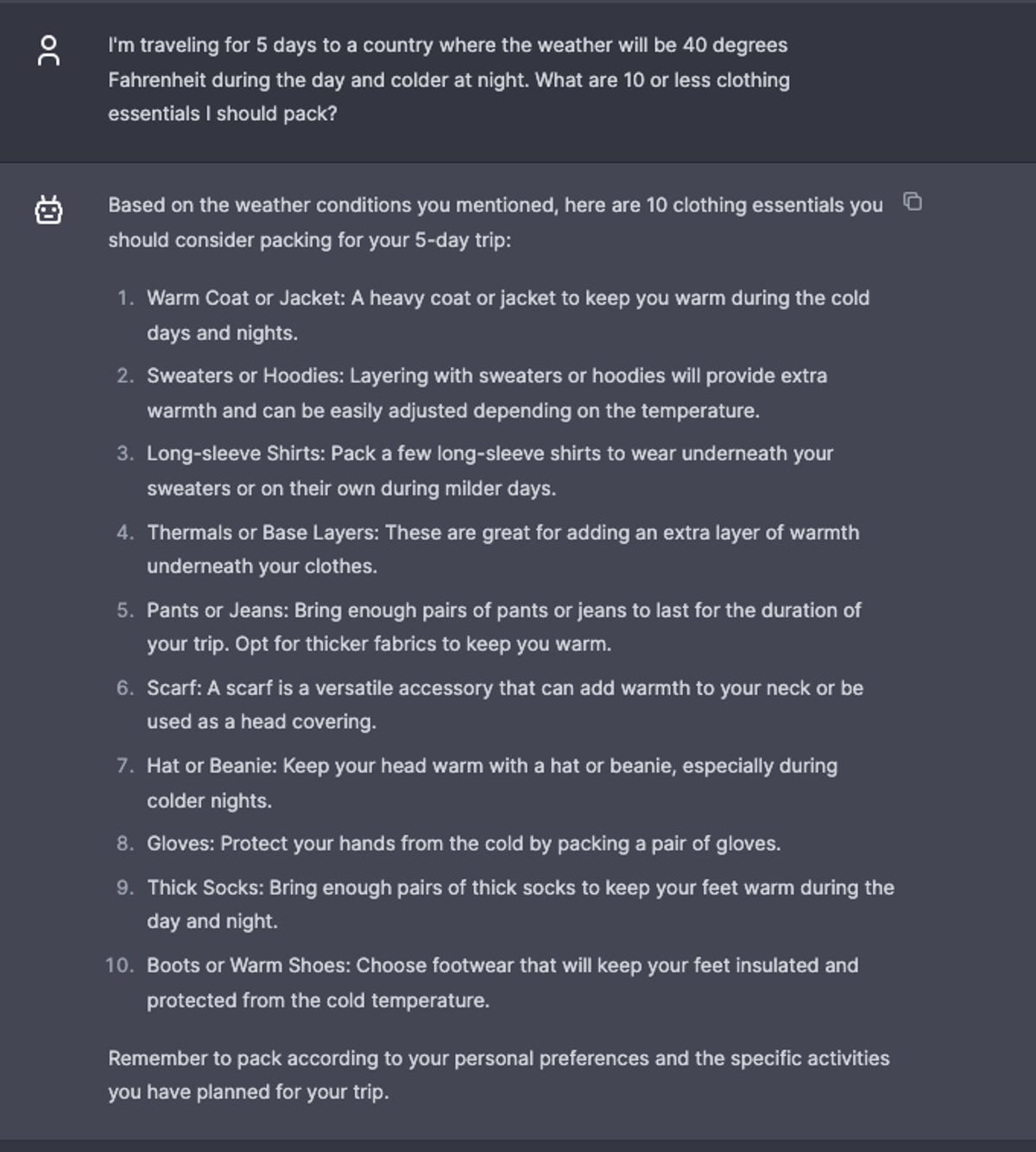

The more specific you are with your prompts to generative AI tools, the better the responses will likely be at addressing your needs. Here, ChatGPT answers a question asking what clothing to pack for a trip to a chilly destination.

First, keep in mind that the AI doesn’t think about your days the same way you do — grouping attractions according to the neighborhood and choosing somewhere for a light lunch to balance out that 20-course tasting menu you’ve planned for dinner. If you’re not careful, you could end up crisscrossing a city three times in an afternoon or dining on pizza for every meal.

Double-check everything. It may be that your AI itinerary makes sense geographically, but isn’t well-paced, cramming too much into a day. You may need to make adjustments for members of your group, including checking on accessibility and building in nap times (for overtired children and adults).

AI also rarely makes use of recent and real-time data, so before you set your heart on ticking off every natural wine bar and street food market an AI tool suggests, make sure the business still exists or has the same operating hours. CNET’s Katie Collins found this out firsthand when using these tools to map out an itinerary for her hometown of Edinburgh, Scotland.

From copilots to companions, of a sort: One way generative AI companies are working to get you comfortable talking to their chatbots is by giving the tech a personality or having it pretend to be someone famous. Or they’re calling their tools things like copilots, companions and assistants to divert your attention from them being, well, artificial and instead get you to buy into the idea they’re helpful collaborators, at your service.

Ascribing human-like qualities to non-human things like computers or animals — a concept known as anthropomorphism — isn’t new. Long before Siri and Alexa, there was ELIZA, a natural language processing computer program created in the 1960s at MIT.

Researchers at the Nielsen Norman Group have already seen that people engaging with chatbots are kind of treating them as humans. They’ve defined the “four degrees of AI anthropomorphism”: courtesy, which involves saying please, thank you or hello to a chatbot; reinforcement, or telling the AI “good job” so it starts to understand what you consider a positive response versus a less helpful one; roleplay, or asking the chatbot to assume the role of a person with specific traits or qualifications, like “Give me the answer from the perspective of an airline pilot”; and companionship, or looking to the AI for an emotional connection.

Our tendency to anthropomorphize is why developers lean into characters, personas and the like. Video conferencing tool Zoom added an AI Companion, which it describes as a “smart assistant” that can help you draft emails and chat messages, summarize meetings and chat threads and brainstorm. Microsoft bills its AI Copilot as “your everyday AI companion.”

Meta created a cast of AI characters that the tech giant’s more than 3 billion users can interact with on its platforms, including Facebook, Instagram, Messenger and WhatsApp. They’re based on real-life celebrities, athletes and artists, including musician Snoop Dogg, ex-quarterback Tom Brady, tennis star Naomi Osaka, and celebrities Kendall Jenner and Paris Hilton.

Then there’s Character.ai, which lets you interact with chatbots based on famous people like Taylor Swift and Albert Einstein and fictional characters such as Nintendo’s Super Mario. While people spent about eight minutes with ChatGPT during their visits in November, visitors spent over 34 minutes engaging with Character.ai, according to Similarweb.

That very high engagement time points to Character.ai’s success in making the chatbot experience “more entertaining for the audience” and shifting attention from the fact the AI may not be telling you the truth, according to Carr. “They insulate themselves somewhat from the complaint about hallucinations because you’re talking with this fictitious character, right? It’s presented as more being just for fun, being a game,” he says. “That’s an interesting way of disarming some of those concerns.”

Not everyone is a fan of anthropomorphizing the tech, including those making their chatbots more human-like. Michelle Zhou, CEO of AI startup Juji, refers to the no-code-required chatbots you can create using her company’s technology as “assistants.”

“I don’t like the words ‘a copilot’ and ‘a partner,’ which means like they’re equal. AIs are not equal partners to us because they are much less knowledgeable. They still have many things to learn,” Zhou says. Instead, she’s settled on “parapartners” because the AI is a source of assistance and support — just like paralegals, who assist lawyers, and paramedics, who support doctors.

How to talk to a chatbot

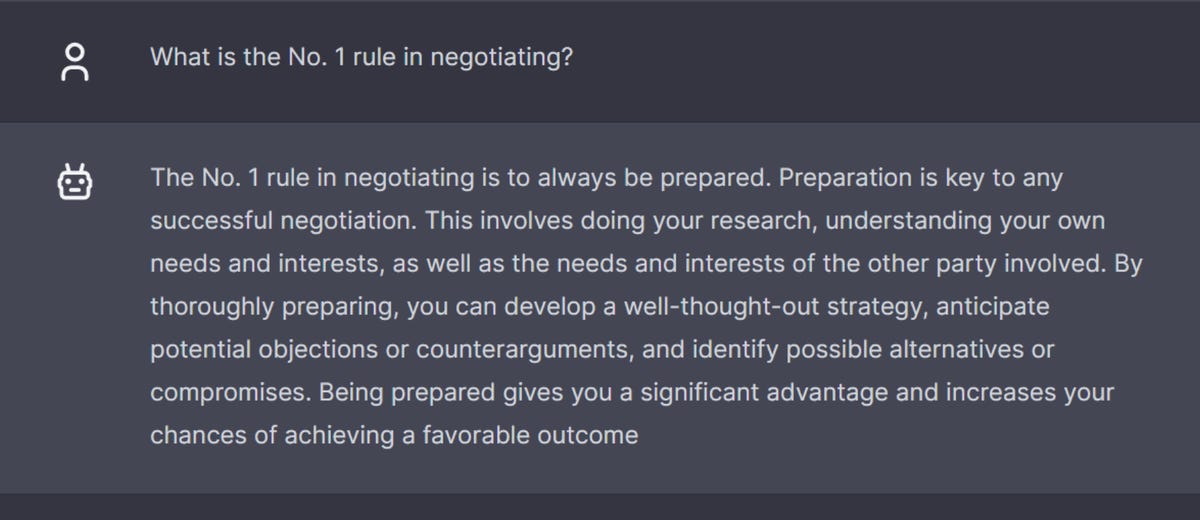

No matter which tool you try, your success will depend on having an effective conversation. That’s where prompt engineering comes in.

Forget Jarvis in the Iron Man movies or HAL from 2001: A Space Odyssey. Instead, think of today’s chatbots as very capable robots that can carry out specific tasks for you or, as some have described them, as autocomplete on steroids. They don’t know what a great story or a beautiful painting is. They only understand patterns and relationships based on the training data (words, images, numbers and other information) they’ve been fed.
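To make the “autocomplete on steroids” idea a little more concrete, here’s a toy sketch in Python. It’s a hypothetical bigram model of our own, vastly simpler than a real LLM (which uses a huge neural network over word fragments, not a table of word pairs), but it shows the core intuition: the program predicts the next word purely from patterns in the text it was fed, with no notion of what any word means.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word in the training text, which words followed it."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def autocomplete(follows: dict[str, Counter], start: str, length: int = 5) -> str:
    """Greedily append the word most often seen after the last word (ties go to the first seen)."""
    output = [start.lower()]
    for _ in range(length):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # this word was never followed by anything in training
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


# Hypothetical, tiny "training data"; real models are fed billions of words.
training_text = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(training_text)
print(autocomplete(model, "the", length=4))  # prints "the cat sat on the"
```

The output sounds plausible only because those word pairs appeared in the training text. Scale the same basic idea up enormously and you get one intuition for why a chatbot can sound fluent and confident even when what it says isn’t true.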

To get good, effective and helpful output, you need to ensure the conversation you’re having with the machine is good, effective and helpful. It’s the GIGO principle: garbage in, garbage out. The way to avoid GIGO scenarios is by providing specific, descriptive information, background and context in your prompts. If you don’t learn some of the art of prompt engineering, you’re likely to be frustrated with the results.

A quick online search will bring up dozens, if not hundreds, of tutorials on how to write an effective prompt, whether you’re after text, an image, a video or something else. ChatGPT’s list of thought starters includes everything from “Teach me to negotiate,” “Draft a thank you note” and “Rank dog breeds for a small apartment” to “Help me improve this job description.”

CNET’s sister site ZDNET has a prompt guide with tips to get you started. Talk to the AI like you would to a person, and expect that your conversation will require some back-and-forth, says ZDNET’s David Gewirtz. Be ready to provide context: Instead of asking “How can I prepare for a marathon?” Gewirtz suggests asking, “I am a beginner runner and have never run a marathon before, but I want to complete one in six months. How can I prepare for a marathon?”

Last, be specific in what you want. A 500-word story? A bullet list of talking points? Slides for a presentation deck? A haiku?
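If it helps to see that advice as a recipe, here’s a small Python sketch that assembles a prompt in the order suggested above: background first, then the question, then the format you want back. The `build_prompt` helper is hypothetical (it isn’t part of ChatGPT or any chatbot’s API), and the “week-by-week bullet list” format is just an illustrative choice; the function only builds the text you would type or paste into the tool.

```python
def build_prompt(question: str, context: str = "", output_format: str = "") -> str:
    """Hypothetical helper: put background first, then the request, then the desired format."""
    parts = []
    if context:
        parts.append(context.strip())
    parts.append(question.strip())
    if output_format:
        parts.append(f"Please answer as {output_format.strip()}.")
    return " ".join(parts)


# The vague version versus the context-rich version from the marathon example.
vague = build_prompt("How can I prepare for a marathon?")
specific = build_prompt(
    "How can I prepare for a marathon?",
    context=(
        "I am a beginner runner and have never run a marathon before, "
        "but I want to complete one in six months."
    ),
    output_format="a week-by-week bullet list",
)
print(vague)
print(specific)
```

The point isn’t the code itself but the structure: a chatbot has only your words to work with, so every piece of context and every formatting instruction you leave out is something it has to guess.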

When it comes to images, CNET’s Shankland suggests using detailed, descriptive, elaborate wording. For more effective images of people, use emotional terms like excited, anxious or jubilant. If you’re stuck, search the internet with terms like “example prompts for generative AI images” to find cheat sheets you can copy and modify.

Some caveats

The powerful capabilities these tools put at your fingertips have led ethicists, governments, AI experts and others to call out the potential downsides of generative AI.

There are unanswered questions about what data is being used to feed these LLMs, with authors including Margaret Atwood, Dan Brown, Michael Chabon, Nora Roberts and Sarah Silverman claiming AI companies have ingested their copyrighted content without their knowledge, consent or compensation.

Because we don’t know what’s in the LLM stew, there are concerns about potential bias and a lack of diversity in these systems, which might lead them to perpetuate harmful stereotypes or discriminate against certain groups or individuals.

There are questions about privacy. The Federal Trade Commission is already investigating OpenAI for how it handles the personal data it collects. In November, the FTC voted on a resolution setting out a process for how it will conduct “nonpublic investigations” into AI-based products and services for the next decade.

Then there’s also that very real problem of hallucinations, which potentially undermines our trust in all of this tech. Google DeepMind researchers came up with the quaint term in 2018, saying they found that neural machine translation systems “are susceptible to producing highly pathological translations that are completely untethered from the source material.”

How big a problem are these hallucinations?

Researchers at a startup called Vectara, founded by former Google employees, tried to quantify it and found that chatbots invent things at least 3% of the time and as much as 27% of the time. Vectara is publishing a “Hallucination Leaderboard” that shows how often an LLM makes up stuff when summarizing a document.

As if hallucinations weren’t bad enough, there are also questions about how generative AI may threaten humanity, with some saying it could lead to human extinction. Sounds extreme, but then think about bad actors using it to design new weapons. Less extreme, but still concerning, bad actors could use it to generate misinformation as part of disinformation campaigns that mislead voters and sway elections.

Governments have taken note and are moving forward on creating guidelines and potential regulations. The Biden administration in late October issued a 111-page executive order on the “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.” That same week, the UK hosted an AI Safety Summit. Representatives from 28 governments, including the US, China and the European Union, signed the Bletchley Declaration, which aims to address how “frontier AI” (the most advanced, cutting-edge AI tech) might affect aspects of our daily lives, including housing, jobs, transportation, education, health, accessibility and justice.

In December, the EU reached agreement on what it has described as “historic” AI legislation that will affect tech companies in the 27 countries in the EU and seek to protect 450 million consumers. The AI Act “aims to ensure that AI systems placed on the European market and used in the EU are safe and respect fundamental rights.” The main idea, say regulators, is to regulate AI based on its “capacity to cause harm to society following a ‘risk-based’ approach: the higher the risk, the stricter the rules.”

Underscoring all of these issues is an existential question: Just because you can do something with tech, should you? When it comes to building and using AI, we need to remember that people are making decisions about how, when and why to use AI tech. As good as an AI might become, it will never replace human intuition and our ability to understand nuance, subtlety and emotion in the decision-making process.

W. Russell Neuman, a professor of media technology at New York University and a founding faculty member of the MIT Media Lab, says we should look at this generative AI moment in context with other major revolutions: the development of language, the printing press and the Industrial Revolution, “where we could substitute machine power for animal power.”

ChatGPT is known for its humanlike responses to prompts, but it’s far from human itself. Behind the scenes, there are many, many people making generative AI happen, including OpenAI CEO Sam Altman, here speaking at his company’s DevDay event in November.

With generative AI, “all of a sudden we can substitute machine thinking, machine decision-making, machine intelligence for human intelligence. If we do that right, it has the kind of transformative power that each of those previous revolutions had,” says Neuman, author of Evolutionary Intelligence: How Technology Will Make Us Smarter.

Neuman agrees with Google CEO Sundar Pichai, who told 60 Minutes in April that AI “is the most profound technology that humanity is working on, more profound than fire or electricity. It gets at the essence of what intelligence is, what humanity is.”

“He’s thinking along the right lines — that’s another reason for taking all of these issues about ethics and control very seriously,” Neuman says. Instead of thinking about AI as something out there making decisions and telling us humans what to do, think of it as a collaborator or assistant that can be harnessed to empower and enable humanity.

Evolutionary intelligence is about remembering that “this is not just [about] the technology,” adds Neuman. “This is a change in how humans deal with things.”

What’s next

All the caveats aside, the conversation about generative AI won’t quiet down anytime soon, even after a management kerfuffle nearly led to the collapse of OpenAI in November, when its prominent CEO, Sam Altman, was briefly ousted before being reinstated. There’s ongoing discussion about how OpenAI makes decisions about its technology and about how its newest strategy will play out: The company is letting creators use ChatGPT to build custom chatbots, no programming skills required. Altman called these customized AI tools “GPTs” (not to be confused with the general-purpose technologies), and OpenAI plans to offer them this year through an app store, much as Apple did when it popularized mobile apps for the iPhone.

Entrepreneurs are taking up the AI charge, with researcher GlobalData saying gen AI startups raised $10 billion in 2023, more than double the venture capital investments in generative AI for 2022.

Sidney Hough paused her undergraduate studies at Stanford University last year to create an AI startup called Chord.pub that collects people’s feedback from across the internet to offer “consensus on any topic.” She sees generative AI as a way to democratize information by offering the potential to let you “bring your own algorithm” to tech platforms.

“Right now, certain companies control the way everyone thinks and what information is distributed to whom and how information is prioritized,” says Hough, 21. In a generative AI world, “consumers can come to their social media networks or their search engines and be like, this is how I think information should be prioritized or this is how I want you to rearrange my information. AI opens up opportunities for that kind of granular control.”

Whether you think generative AI is great or problematic, it’s time to step up and consider how it should and shouldn’t be embraced by humans.

“Rather than being the Luddite,” Neuman advises, “you want to be the thoughtful, cautious, measured champion of how it can enhance human capacity, rather than compete with them.”

CNET Principal Writer Stephen Shankland and Senior European Correspondent Katie Collins contributed to this report.

Editors’ note: CNET is using an AI engine to help create some stories. For more, see this post.

Visual Designer | Zooey Liao

Video | Chris Pavey, Celso Bulgatti, John Kim

Senior Project Manager | Danielle Ramirez

Director of Content | Jonathan Skillings